This article tackles the problem of two-microphone, frequency-domain speech enhancement in a stationary background noise environment. In all frequency-domain noise reduction algorithms, the critical ingredient is the estimate of the signal-to-noise ratio (SNR). Single-microphone algorithms such as spectral subtraction, Wiener filtering and minimum mean square error (MMSE) estimation all rely on estimates of the posterior SNR, with MMSE also using an estimate of the prior SNR. Errors in these SNR estimates introduce musical noise. Further, for real time operating systems (RTOS), a faster and more efficient approach may be required. We describe a coherence-based approach to estimating the posterior SNRs.

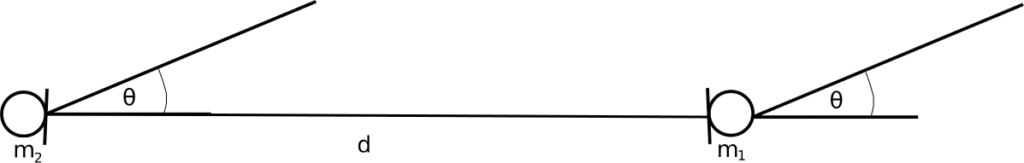

Consider a two-microphone array as shown in Figure 1:

Figure 1: Two microphone array

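Suppose the observed signals at the two microphones are given by:

$$x_1(n) = s(n) + v_1(n), \qquad x_2(n) = s(n - \tau) + v_2(n)$$

where, without loss of generality, $\tau$ is the delay between microphone 2 and microphone 1. The noise signals $v_1(n)$ and $v_2(n)$ are zero mean and uncorrelated with the speech signal $s(n)$. Under the mild assumption that the noise signals at both microphones are correlated such that their coherence is $\gamma(w)$, it can be shown that the coherence between the two microphone signals will obey:

$$\Gamma_{x_1 x_2}(w) = \frac{\mathrm{SNR}(w)}{1 + \mathrm{SNR}(w)}\, e^{j w \tau} + \frac{1}{1 + \mathrm{SNR}(w)}\, \gamma(w), \qquad \tau = \frac{f_s\, d \cos\theta}{c}$$

where $f_s$ is the sampling rate, $d$ is the microphone spacing, $\theta$ is the incident angle for the speech signal and $c$ is the speed of sound in air. From the equation above, the SNRs for each frequency bin can be estimated using:

$$\mathrm{SNR}(w) = \frac{\gamma(w) - \Gamma_{x_1 x_2}(w)}{\Gamma_{x_1 x_2}(w) - e^{j w \tau}}$$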
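As a rough illustration of the per-bin SNR estimate, the following Python sketch assumes the commonly used two-microphone coherence model, in which the observed coherence is a mixture of the speech coherence $e^{jw\tau}$ (with $\tau = f_s d \cos\theta / c$ samples and $w$ in radians per sample) and the noise coherence $\gamma(w)$, weighted by $\mathrm{SNR}/(1+\mathrm{SNR})$ and $1/(1+\mathrm{SNR})$. The function names, the microphone spacing `d`, the frame-averaged coherence estimator and the real-valued least-squares solve with clipping are all assumptions made for this example, not details taken from the article.

```python
import numpy as np

def speech_coherence(omega, theta, d, fs, c=343.0):
    """Coherence of a single plane-wave source (assumed model).

    omega: bin frequencies in rad/sample; theta: incident angle in radians,
    measured from the array axis; d: microphone spacing in metres.
    """
    tau = fs * d * np.cos(theta) / c      # inter-microphone delay in samples
    return np.exp(1j * omega * tau)

def estimate_coherence(X1, X2, eps=1e-12):
    """Complex coherence per bin from STFTs of shape (frames, bins).

    Spectra are averaged over frames; a recursive average could be
    used instead in a streaming implementation.
    """
    p12 = np.mean(X1 * np.conj(X2), axis=0)   # cross-spectrum
    p11 = np.mean(np.abs(X1) ** 2, axis=0)    # auto-spectrum, mic 1
    p22 = np.mean(np.abs(X2) ** 2, axis=0)    # auto-spectrum, mic 2
    return p12 / np.sqrt(p11 * p22 + eps)

def snr_from_coherence(gamma_x, gamma_n, gamma_s, snr_max=1e3, eps=1e-12):
    """Solve gamma_x = (snr * gamma_s + gamma_n) / (1 + snr) for snr.

    Measured coherences are noisy, so the real-valued least-squares
    solution of (gamma_n - gamma_x) = snr * (gamma_x - gamma_s) is used,
    then clipped to [0, snr_max]. Both choices are assumptions.
    """
    num = gamma_n - gamma_x
    den = gamma_x - gamma_s
    snr = np.real(num * np.conj(den)) / (np.abs(den) ** 2 + eps)
    return np.clip(snr, 0.0, snr_max)
```

The estimate becomes ill-conditioned in bins where the noise coherence is close to the speech coherence (for example, at very low frequencies in a diffuse field), so a practical system would likely need smoothing or a floor in those bins.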

It can be seen that the angle of arrival must also be estimated, but this can be done using any of the well-known algorithms such as GCC-PHAT. $\gamma(w)$ can be estimated from the non-speech frames. Since most processing for speech enhancement is already done in the frequency domain, the extra computational burden is minimal.
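The two auxiliary estimates mentioned above can be sketched in Python as follows. This is a minimal example under stated assumptions: the delay is found with integer-sample resolution via GCC-PHAT, the angle follows from the far-field relation $\tau = f_s d \cos\theta / c$, and the noise coherence is averaged over frames that a voice activity detector has flagged as non-speech. The function names, the `is_speech` flags, the microphone spacing `d` and the value 343 m/s for the speed of sound are illustrative choices, not details from the article.

```python
import numpy as np

def gcc_phat_delay(x1, x2, max_delay, eps=1e-12):
    """Delay of x2 relative to x1 in samples (integer resolution).

    max_delay must be >= 1 and bounds the search range in samples.
    """
    n = len(x1) + len(x2)                 # zero-pad to avoid circular wrap-around
    X1 = np.fft.rfft(x1, n=n)
    X2 = np.fft.rfft(x2, n=n)
    cross = X2 * np.conj(X1)
    cross /= np.abs(cross) + eps          # PHAT weighting: keep phase only
    cc = np.fft.irfft(cross, n=n)
    # Reorder so lags run from -max_delay to +max_delay
    cc = np.concatenate((cc[-max_delay:], cc[:max_delay + 1]))
    return int(np.argmax(cc)) - max_delay

def delay_to_angle(delay, d, fs, c=343.0):
    """Incident angle in radians from a delay in samples (far-field model)."""
    return float(np.arccos(np.clip(c * delay / (fs * d), -1.0, 1.0)))

def noise_coherence(X1, X2, is_speech, eps=1e-12):
    """Noise coherence per bin, averaged over non-speech frames only.

    X1, X2: complex STFTs of shape (frames, bins);
    is_speech: boolean flag per frame from a VAD (hypothetical input).
    """
    idx = ~np.asarray(is_speech, dtype=bool)
    p12 = np.mean(X1[idx] * np.conj(X2[idx]), axis=0)
    p11 = np.mean(np.abs(X1[idx]) ** 2, axis=0)
    p22 = np.mean(np.abs(X2[idx]) ** 2, axis=0)
    return p12 / np.sqrt(p11 * p22 + eps)
```

With closely spaced microphones the true delay is often a fraction of a sample, so interpolating around the correlation peak would probably be needed for a usable angle estimate.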
